Detecting the mood of coworkers in an office using Cognitive Services

With recent developments in image and video processing, adding relatively intelligent features to an application has become a fairly simple task. Almost all cloud providers now offer easy-to-use Machine Learning APIs that let you perform visual recognition without a Computer Science doctorate.

In this just-for-fun project, my co-workers and I decided to build a small system that uses the Emotion API to get some insight into how people feel at work.

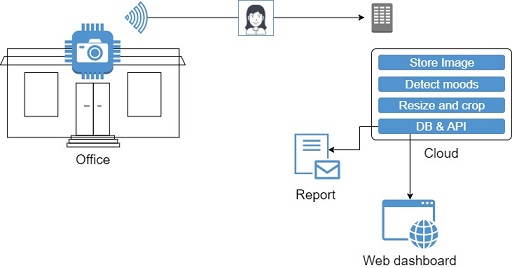

The setup

In the office we installed a Raspberry Pi (RPi) board with a camera. Choosing the camera’s location can be tricky, because we need to catch as many faces as possible during the day, and ideally different faces. The job of this part is to detect frames that contain faces and, once one is detected, send the image to the backend.

When an image arrives at the backend, we persist it, send it to the Cognitive Service, store the results and perform some additional image manipulations.

This pipeline allows us to send daily reports of who is the happiest, angriest, etc. person, and to visualize aggregated data - how moods change over a period of time, which moods dominate and so on.

Naturally, we also had to try out other cool toys while building such a project - serverless architecture, JS frameworks, 3D printing, IoT boards, etc.

Let’s dig into details.

The device

The device consists of 2 main components - a Raspberry Pi and a camera connected to it. It is all packed into a nice box, which was modeled and printed by my colleague:

The camera is located in the right eye of the owl, which allowed us to place it near the door of the central entrance to observe all the people entering the office:

Here are a few more shots of the device internals:

Device’s smarts

The device is not that smart, but it has some interesting parts in it. What I wanted to avoid is sending the entire video stream off the device. The optimal approach is to detect frames that may contain faces (AKA face detection) and send only those. This saves a huge amount of traffic to the backend. But it also means that the device’s CPU should be fast enough to execute the algorithm ideally 30 times per second, which leaves about 33 ms per frame.

It is currently an RPi 2. It works kinda OK - some frames are dropped. The plan is to upgrade to an RPi 3.

Face detection is performed using the OpenCV library. The unfortunate part is that there is no precompiled package of it for the Raspberry Pi; the fortunate part is that someone wrote thorough instructions for compiling it from source for the RPi.

The source code that runs on the board is located here. There are 2 types of workers:

- Detector analyzes each frame using a Haar Cascade classifier and, if one or more faces are detected, pushes the image into an in-memory queue

- Sender de-queues the image and sends it to the backend

Simple.
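
To make the two workers concrete, here is a minimal sketch of the detector/sender split in Python. It is not the code that runs on our board: the function names, the `cascade_path` parameter and the `send` callback are assumptions made for illustration, and dropping frames when the queue is full is just one possible policy.

```python
import queue
import threading


def make_haar_detector(cascade_path):
    """Return a has_face(frame) function backed by an OpenCV Haar cascade.

    cascade_path is an assumption: the path to a frontal-face cascade XML
    file such as the ones distributed with OpenCV.
    """
    import cv2  # imported lazily so the queue logic below runs without OpenCV

    cascade = cv2.CascadeClassifier(cascade_path)

    def has_face(frame):
        # Haar cascades work on grayscale images
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        faces = cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
        return len(faces) > 0

    return has_face


def detector(frames, has_face, outbox):
    """Push every frame that seems to contain a face into the queue."""
    for frame in frames:
        if has_face(frame):
            try:
                outbox.put_nowait(frame)
            except queue.Full:
                pass  # sender is behind: drop the frame instead of blocking
    outbox.put(None)  # sentinel telling the sender to stop


def sender(outbox, send):
    """De-queue frames and hand each one to send() until the sentinel arrives."""
    while True:
        frame = outbox.get()
        if frame is None:
            break
        send(frame)
```

In a real run, `frames` would be an iterator over camera frames, `has_face` would come from `make_haar_detector(...)`, and `send` would upload the image to the backend. The sender would run in its own `threading.Thread`, so a slow upload does not hold up detection.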

Backend

The backend’s job is to execute a pipeline for each received image - receive, store, enrich, transform and expose. We implemented this pipeline using Azure Functions. Each function performs the smallest possible task, and they are connected using Queues and Topics of Azure Service Bus.

The building blocks of the pipeline that runs when an image is received are self-explanatory, and you can also find a flow diagram along with the source code here. The core part of detecting moods is located in the function SendToEmotion, and it essentially sends the image to the Azure Emotion API. Further down the pipeline, the results are persisted into a SQL database so they can later be used for reporting and the UI.
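
To give a feel for what happens to a result before it is stored, here is a small Python sketch that picks the strongest emotion for each face. It is not the code of SendToEmotion. The response shape (a list of faces, each with a `scores` dictionary of emotion names and confidences) is an assumption for illustration, the sample values are made up, and `dominant_emotions` is a hypothetical helper.

```python
def dominant_emotions(faces):
    """Return (emotion, score) for the highest-scoring emotion of each face."""
    results = []
    for face in faces:
        scores = face["scores"]
        emotion = max(scores, key=scores.get)
        results.append((emotion, scores[emotion]))
    return results


# Made-up sample in the assumed response shape: one entry per detected face.
sample_response = [
    {
        "faceRectangle": {"left": 10, "top": 20, "width": 64, "height": 64},
        "scores": {"anger": 0.01, "happiness": 0.9, "neutral": 0.08, "sadness": 0.01},
    },
    {
        "faceRectangle": {"left": 120, "top": 25, "width": 60, "height": 60},
        "scores": {"anger": 0.05, "happiness": 0.1, "neutral": 0.25, "sadness": 0.6},
    },
]

for emotion, score in dominant_emotions(sample_response):
    print(emotion, score)
```

Reducing each face to a single dominant emotion keeps the stored rows simple, at the cost of discarding the other scores. Persisting the full score set instead would allow finer-grained reports later.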

Exposing results

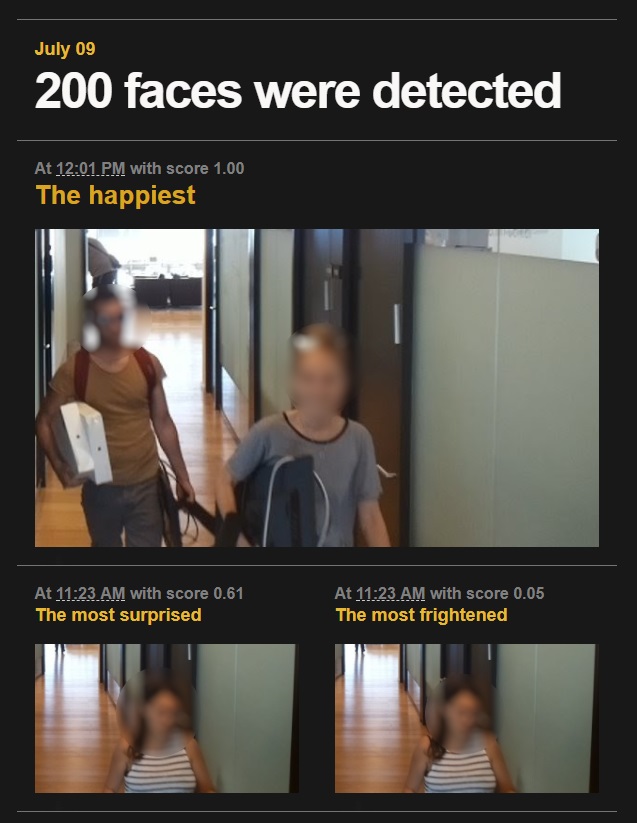

We started with a daily report via email that shows the happiest, angriest, etc. people. There was no real value in it besides the fun part:

The next phase was to create a dashboard that shows how moods change over a period of time and aggregates them by time of the workday:

From this we came to the following conclusions:

- People are usually happier before breakfast and dinner

- Sad (or tired?) after dinner

- Angry on Sundays
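
The kind of aggregation behind such a dashboard can be sketched in a few lines of Python. This is an illustration only, not our dashboard code: the `(timestamp, emotion)` record shape and the `dominant_mood_by_hour` helper are assumptions, and the sample records are made up.

```python
from collections import Counter, defaultdict
from datetime import datetime


def dominant_mood_by_hour(records):
    """Map each hour of the day to the emotion seen most often in that hour."""
    counts = defaultdict(Counter)
    for taken_at, emotion in records:
        counts[taken_at.hour][emotion] += 1
    return {hour: c.most_common(1)[0][0] for hour, c in sorted(counts.items())}


# Made-up records: when the image was taken and its dominant emotion.
records = [
    (datetime(2017, 1, 2, 9, 5), "happiness"),
    (datetime(2017, 1, 2, 9, 40), "happiness"),
    (datetime(2017, 1, 2, 9, 50), "neutral"),
    (datetime(2017, 1, 2, 14, 10), "sadness"),
]

print(dominant_mood_by_hour(records))
```

Grouping by weekday instead of hour (for example with `taken_at.weekday()`) would give the per-day view in the same way.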

There are a few more screens. The web client app source code is located here.

Summary

The end result is a system that aggregates people’s emotions and exposes the results via reports and a dashboard.

We argued a lot about whether there is practical value in such a system. For me personally, this turned out to be a nice experience that allowed me to explore some things we don’t deal with day-to-day.